A sunny day, unblemished by a single cloud in the sky. A masterful painting with not one stroke out of place. An hour of watching Jimmy Fallon without phones ringing, the door buzzing, or insipid ads playing on a loop. That is what perfection looks like to me.

Most website owners I know would add their own websites to that list. I beg to disagree.

Admittedly, you no longer need to hire a designer, or even a developer, to build a half-decent website. Tools such as Weebly (great for launching themed blogs in an instant), Spaces (the latest for online stores with payment systems and landing pages), and the like eagerly await the DIY entrepreneur. Not one for quick fixes? There are plenty of developers who can design, and designers who can code, for you to turn to.

But there’s always something that even the most meticulous developer can overlook. Nine times out of ten, that overlooked bit relates to SEO.

Now, SEO audits are nearly as common as broken websites. So are in-depth articles about how to perfect every little bit that helps move your rankings and conversions that critical half-inch further. And while everyone is up-to-the-minute on the most recent changes to the Google algorithm, where it’s headed, and how to stay on top of it, there remain some simple issues webmasters tend to ignore over and over again.

To get an idea of how long these problems (and their solutions) have existed, go to Google and look for the earliest times they were mentioned and discussed. Chances are, you’ll be taken back to a time when SEO wasn’t even a known term. When you’re done reading, pick any 10 sites at random and check how many of them make at least two of these seven mistakes. If even one doesn’t, I’d like to hear about it!

Grab a notepad, tick off all the gaps you see in your own site from the list below, and get to work on fixing them.

Slow and Steady Doesn’t Win Any Races

Users have officially run out of patience. The average user will wait just two seconds for a page to load before moving on to greener (and faster) pastures. Since page load speed is so central to user experience, it’s no surprise that Google uses site speed as an SEO ranking factor: not a huge one, but definitely an important one. Tools like Pingdom (with a great waterfall display) and GTmetrix (with detailed recommendations for code fixes) help you analyze your site’s speed and find out why it loads slowly.

These are, of course, in addition to Google’s own PageSpeed Insights (for mobile and desktop), which you should definitely check out.
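For a quick first check before reaching for those tools, you can time how long your server takes to deliver a page’s HTML. The sketch below is only a rough proxy, assuming Python 3.9+ and the standard library: it downloads the HTML document alone, without images, scripts, stylesheets, or rendering, so a real page load will always take longer. The two-second budget is the article’s figure; the function names are illustrative.

```python
import time
import urllib.request

# The two-second figure comes from the article; treat it as a rule of thumb.
LOAD_BUDGET_SECONDS = 2.0


def time_html_fetch(url: str, timeout: float = 10.0) -> tuple[float, int]:
    """Download the HTML at `url` once and return (elapsed seconds, bytes received).

    Only the HTML document is fetched: no images, scripts, or stylesheets are
    requested and nothing is rendered, so a real page load will take longer.
    """
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        body = response.read()
    elapsed = time.perf_counter() - start
    return elapsed, len(body)


def within_budget(elapsed: float, budget: float = LOAD_BUDGET_SECONDS) -> bool:
    """True if a measured time is at or under the load-time budget."""
    return elapsed <= budget
```

Calling `time_html_fetch("https://www.example.com/")` a few times and averaging the results smooths out network noise. If the HTML alone already eats most of the two seconds, the waterfall views in Pingdom or GTmetrix are the place to dig further.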

Forgetting Image Tags

It’s nice to have a website with brilliant images that add to the content and make it more engaging for users. However, the images that you and I can see on a website are pretty much invisible to the average search bot (read: Googlebot; Bingbot manages). Enter image tags.

Make sure every single image on your website can be searched and indexed by search spiders by giving each one an ALT tag (strictly speaking, an alt attribute). A description of the image and a caption underneath it are both bonuses that help search bots understand what they’re crawling. Just don’t stuff your ALT tags with keywords in the hope of seeing your images rise to the top of the pile. Instead, write genuine descriptions that include keywords only where they fit naturally.
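Finding images without ALT text by hand gets tedious on a large site. Here is a minimal sketch using Python’s built-in `html.parser` that scans an HTML string and lists images whose alt attribute is missing or blank; the class and function names are illustrative. One assumption worth flagging: HTML treats `alt=""` as valid for purely decorative images, so the sketch reports empty values separately for you to review rather than treating them as errors.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Records <img> tags whose alt attribute is missing or left blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: list[str] = []  # no alt attribute at all
        self.empty_alt: list[str] = []    # alt="" or whitespace only

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us, and
        # self-closing <img ... /> tags are routed here as well.
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        if "alt" not in attributes:
            self.missing_alt.append(src)
        elif not (attributes["alt"] or "").strip():
            self.empty_alt.append(src)


def audit_images(html: str) -> tuple[list[str], list[str]]:
    """Return (images with no alt, images with an empty alt) for an HTML string."""
    auditor = ImageAltAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing_alt, auditor.empty_alt
```

Feed it the HTML of each page (saved from your browser or fetched with the timing helper above) and work through the `missing_alt` list first; those are the images search bots currently know nothing about.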

Not Researching Long Tail Keywords

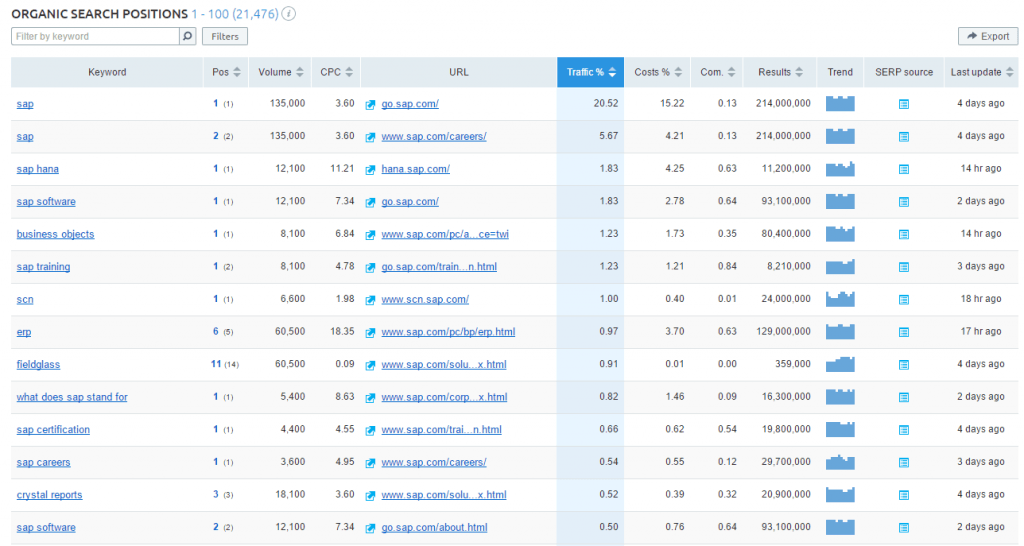

You probably already create content and optimize your site for your top keywords and phrases. However, on most websites a significant share of search queries don’t match your exact keywords at all; instead, they are variations on them. Check whether this is true of your website with a keyword analytics tool of your choice. SEMrush is one good option: it looks at your organic as well as paid keywords and reports traffic, search volumes, trends, related keywords, and competitors’ data on them.
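Once you have exported your search queries with click counts (from SEMrush, Google Search Console, or a similar tool), a few lines of code can show how much traffic comes from variations rather than your exact head keywords. This sketch assumes the export has already been loaded into a plain `{query: clicks}` dictionary; the function names and the simple “contains every word of the head keyword” rule for grouping variants are illustrative choices, not any tool’s own method.

```python
def long_tail_share(query_clicks: dict[str, int], head_keywords: set[str]) -> float:
    """Fraction of clicks that came from queries other than an exact head keyword."""
    heads = {keyword.strip().lower() for keyword in head_keywords}
    total = sum(query_clicks.values())
    if total == 0:
        return 0.0
    head_clicks = sum(
        clicks for query, clicks in query_clicks.items()
        if query.strip().lower() in heads
    )
    return (total - head_clicks) / total


def group_variants(queries: list[str], head_keywords: list[str]) -> dict[str, list[str]]:
    """Group queries under each head keyword whose words they all contain.

    Exact matches are left out, so each list holds only the longer variants.
    A query containing several head keywords appears under each of them.
    """
    groups: dict[str, list[str]] = {head: [] for head in head_keywords}
    for query in queries:
        normalized = query.strip().lower()
        query_words = set(normalized.split())
        for head in head_keywords:
            head_normalized = head.strip().lower()
            if normalized != head_normalized and set(head_normalized.split()) <= query_words:
                groups[head].append(query)
    return groups
```

If `long_tail_share` comes back high, the lists from `group_variants` are a ready-made starting point for new content that targets those longer phrases directly.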

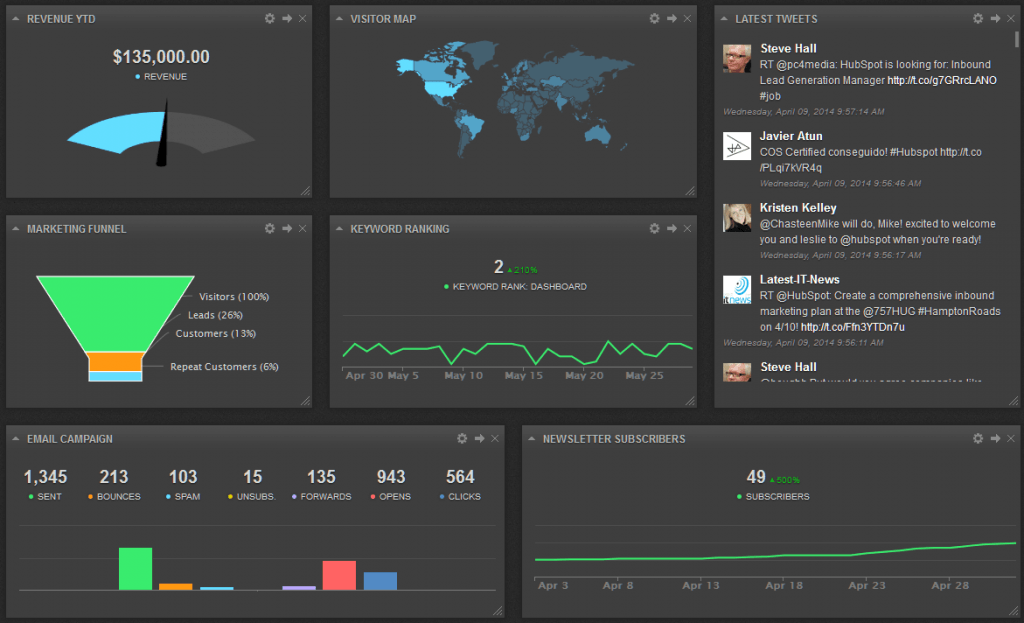

To avoid wasting resources barking up the wrong tree, put your keyword analytics in perspective alongside your data from Google Analytics, your social media management suite, your email marketing platform, and more. An all-in-one marketing dashboard like Cyfe gives you a bird’s-eye view of data from multiple sources at a glance.

Ignoring Local SEO

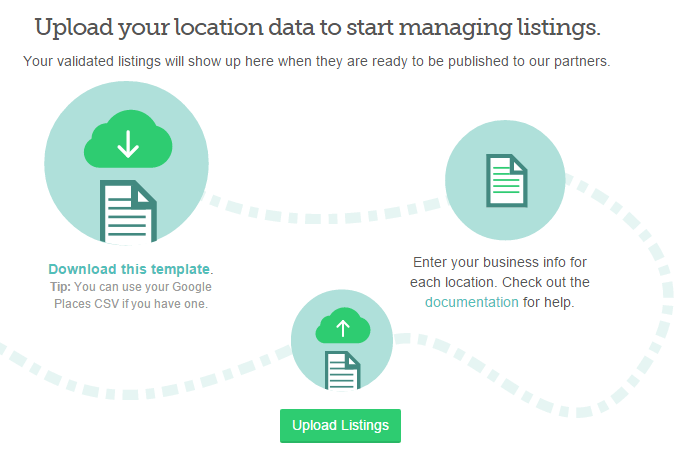

If your business serves customers at a physical location, it needs local SEO. Why? Because people tend to Google a business’s address, opening hours, or phone number before visiting. You don’t want to miss out on a customer who wants to spend money at your store, do you?

With a comprehensive tool like Moz Local, first discover where you currently stand with respect to citations and listings across the web. Once you know which directories, websites, and listings you’ve missed out on, get to building your profile on those sites right away.

A consistent NAP (name, address, and phone number), written the same way on every profile, is essential for getting the full benefit of local SEO.
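Small differences such as “Street” versus “St.” or “(555) 010-2233” versus “555.010.2233” are easy to miss when you compare listings by eye. The sketch below normalizes each listing’s name, address, and phone number and reports any field that still differs between sources. It rests on stated assumptions: the listings have already been collected by hand or exported from a tool like Moz Local, the abbreviation table is a short hypothetical one you would extend, and phone numbers are treated as 10-digit North American numbers.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    source: str   # where this citation lives, e.g. your site or a directory
    name: str
    address: str
    phone: str


# Hypothetical, deliberately short abbreviation table; extend it for your market.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def normalize_text(value: str) -> str:
    """Lowercase, drop punctuation, and collapse common street-word variants."""
    words = re.sub(r"[^\w\s]", " ", value.lower()).split()
    return " ".join(ABBREVIATIONS.get(word, word) for word in words)


def normalize_phone(value: str) -> str:
    """Keep digits only; assumes 10-digit North American numbers, so a leading 1 is dropped."""
    digits = re.sub(r"\D", "", value)
    return digits[-10:]


def nap_inconsistencies(listings: list[Listing]) -> dict[str, dict[str, list[str]]]:
    """For each NAP field with more than one normalized value, map value -> sources."""
    normalizers = {
        "name": lambda listing: normalize_text(listing.name),
        "address": lambda listing: normalize_text(listing.address),
        "phone": lambda listing: normalize_phone(listing.phone),
    }
    report: dict[str, dict[str, list[str]]] = {}
    for field, normalize in normalizers.items():
        values: dict[str, list[str]] = {}
        for listing in listings:
            values.setdefault(normalize(listing), []).append(listing.source)
        if len(values) > 1:
            report[field] = values
    return report
```

An empty report means your NAP is consistent everywhere you checked; otherwise, each entry tells you which sources disagree and what they say, so you know exactly which profiles to update.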

Missing XML Sitemap

Do you have one? If not, get one. Why? Because it gives Google and other search bots a list of exactly which pages you want indexed. This is especially useful for getting pages that sit three or more levels deep on the site discovered and ranked.

The sitemap you create for search engines should be in XML, the standard format they are built to read. It doesn’t hurt to have a sitemap for human visitors to your site, either. That way, when users can’t find a particular page through your main navigation, they can dig into your sitemap and find it there. Only this time, make sure people like us can read it by creating it in HTML.
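Most CMSs and SEO plugins can generate the XML sitemap for you, but the format itself is simple. As a sketch of what search engines expect, here is a small generator built on Python’s standard `xml.etree.ElementTree`, following the sitemaps.org protocol: a `urlset` element in the sitemap namespace containing one `url` entry per page, each with a required `loc` and an optional `lastmod`. The page list is assumed to come from wherever you keep your URLs; the function name is illustrative.

```python
import xml.etree.ElementTree as ET
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, Optional[str]]]) -> str:
    """Return sitemap XML for (absolute URL, last-modified date or None) pairs.

    Dates should use the W3C Datetime format, e.g. "2024-01-15". ElementTree
    escapes characters such as "&" in URLs automatically.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Save the output as sitemap.xml at the root of your site and submit it in Google Search Console so Google knows where to find it.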

No Robots.txt

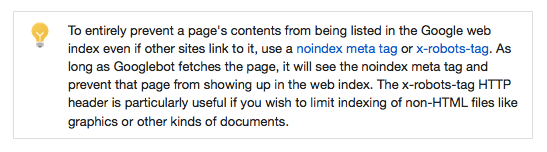

Just as a sitemap tells search engines which pages they should index, a robots.txt file tells them which pages they shouldn’t crawl. This is critical, especially if you host internal data on your domain and don’t want it turning up for the average user on the web. Bear in mind that robots.txt is itself publicly readable, so it is no substitute for password protection.
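A robots.txt file is plain text: each `User-agent` line names the crawlers a group of rules applies to, and each `Disallow` line gives a path prefix they should stay out of. Before publishing changes, you can check which URLs your rules actually block with Python’s built-in `urllib.robotparser`. The robots.txt content below is a hypothetical example, and one caveat applies: this standard-library parser matches plain path prefixes and does not understand the `*` and `$` wildcards that Google supports, so test wildcard rules with Google’s own tools instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt keeping all crawlers out of an /internal/ section.
ROBOTS_TXT = """\
User-agent: *
Disallow: /internal/
"""


def crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check a URL against robots.txt rules supplied as text (no network fetch)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

Remember that a “no” here only means well-behaved crawlers won’t fetch the page; it does not by itself guarantee the URL stays out of search results.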

Typically, a “Disallow” rule written with the right syntax is enough to stop Googlebot from crawling a specific URL. However, if other sites that Google can crawl link to that URL, there’s a small chance the page might still surface in search results, because Google can index a linked URL without crawling it. In such a case, follow Google’s instructions:

Not Using 301 Redirects

A 301 (permanent) redirect is what happens when you type in an old URL and your browser automatically takes you to a brand new, updated URL for the same content. “301” is the HTTP status code the server returns. It is the standard option for websites that are rebranding but don’t want to lose their existing clients and site authority.

A 301 redirect tells search engines that the content in question has moved to a new URL and that they should pass all of its link authority on to that URL. However, you don’t have to be rebranding to use one. Whenever the content of a page has changed drastically or been removed altogether, a 301 redirect is the advised route.
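How you set up a 301 depends on your server or CMS (an Apache or nginx rule, a WordPress plugin, and so on), but the mechanics are always the same: the server answers the old URL with status 301 and a `Location` header pointing to the new one. The sketch below shows that mechanism as a small piece of Python WSGI middleware, assuming a hypothetical `REDIRECTS` map of old paths to new ones and a stand-in `site` app for everything else. It answers with 301 rather than 302 so the move is marked as permanent.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical map of retired paths to their permanent replacements.
REDIRECTS = {
    "/old-services.html": "/services/",
    "/blog/2015/seo-tips": "/blog/seo-tips/",
}


def site(environ, start_response):
    """Stand-in for the existing site: every other path returns a normal page."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Regular page"]


def with_permanent_redirects(app, redirects):
    """Wrap a WSGI app so that mapped paths answer 301 Moved Permanently."""
    def middleware(environ, start_response):
        target = redirects.get(environ.get("PATH_INFO", ""))
        if target is None:
            return app(environ, start_response)
        # 301 (not 302) marks the move as permanent, which is the signal
        # search engines rely on to carry authority over to the new URL.
        start_response("301 Moved Permanently",
                       [("Location", target), ("Content-Length", "0")])
        return [b""]
    return middleware


def call_app(app, path):
    """Run a WSGI app in-process and return (status, headers, body) for a GET."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app(environ, start_response))
    return captured["status"], captured["headers"], body


application = with_permanent_redirects(site, REDIRECTS)
```

`call_app` lets you check each mapping without starting a server. To try it in a browser, `wsgiref.simple_server.make_server("", 8000, application).serve_forever()` serves it locally on port 8000.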

A 404 status code (page not found, usually the result of a broken link) is a bad experience for your visitors, and a 302 status code (temporary redirect) used for a permanent move sends Googlebot the wrong signal. Google doesn’t take kindly to either.

Start Fixing

A regular site audit is an easy way of detecting such glaring gaps in your SEO and fixing them ASAP. If you haven’t been doing site audits so far, get into the habit now. Your site and its search visibility will thank you for it.
