Tons of new ads are created each year worldwide, so how do you know yours will make the grade? The answer is to test, measuring your ad copy/design against possible alternatives to determine which iteration produces the best results.

But there are lots of myths about testing PPC ad copy and design, and lots of mistakes being made. This article will bust some of those myths and mistakes and help you improve your overall PPC revenue. If you’re super smart, you’ll also incorporate winning test data into other aspects of your online marketing, like SEO, website copy, Facebook advertising and more.

You can be a winner at PPC advertising if you take heed and avoid the pitfalls of A/B testing below.

Myth #1: You Should Test Everything All The Time!

It’s true that you should do lots of testing – but it’s very important to take your time and develop a testing plan!

Many folks feel the need to get a running start and to test every element they can come up with, and this is exacerbated by the availability of inexpensive testing tools. Their mantra is “Start testing right away!” or “Test everything all the time!”

My advice is to slow down and plan out your testing. The goal is to really consider what you’re testing and why you’re running each test. Here are some examples of what I track for each test (I do this in an Excel spreadsheet):

- Testing Goal. What is the goal of this particular test? An example might be to determine the most valuable free shipping threshold offer.

- Testing Execution. What elements am I going to test to achieve my testing goal? For the free shipping example, you might test alternative threshold values and the messaging of the free shipping offer.

- Technical Level Of Execution. How much technical work will this test require? The folks responsible for the landing page can comment directly in this part of the spreadsheet.

- Creative Level Of Execution. How much creative work will this test require? The folks responsible for the creative could add suggestions here.

- Test Executed? This lets folks know whether the test has been deployed.

- Test Evaluation. What were the results of the test? How did the test version fare against the baseline? Following our example above, you might note that there was an increase in conversion rate with no negative impact on shipping costs.

- Iterated Upon? We’ll talk more specifically about iteration later in this article.

Without a plan, you may be able to periodically hit the “testing ball” out of the proverbial park, but your testing efforts will not be forward-moving and iterative. Another big mistake is that companies don’t keep track of their tests, so they often rerun the same ones. A solid testing protocol or system prevents this.
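The spreadsheet columns listed above map naturally onto a simple record type. The Python sketch below is one hypothetical way to model such a testing log; the class and field names (`AdTestEntry`, `AdTestLog`) are illustrative assumptions, not a tool described in this article. Its main point is the duplicate check, which refuses to log a test whose goal and execution match one that is already planned or run, so the same test doesn’t quietly get rerun.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdTestEntry:
    """One row of a hypothetical testing spreadsheet (columns from the list above)."""
    goal: str                          # Testing Goal
    execution: str                     # Testing Execution: elements being tested
    technical_level: str               # Technical Level Of Execution, e.g. "low"
    creative_level: str                # Creative Level Of Execution
    executed: bool = False             # Test Executed?
    evaluation: Optional[str] = None   # Test Evaluation, filled in after the test
    iterated_upon: bool = False        # Iterated Upon?

    def key(self) -> tuple[str, str]:
        # Normalise case and surrounding whitespace so trivially different rows compare equal.
        return (self.goal.strip().lower(), self.execution.strip().lower())


class AdTestLog:
    """Holds every planned test and rejects ones that were already logged."""

    def __init__(self) -> None:
        self.entries: list[AdTestEntry] = []

    def add(self, entry: AdTestEntry) -> bool:
        """Append the entry unless an equivalent test is already logged."""
        if any(existing.key() == entry.key() for existing in self.entries):
            return False
        self.entries.append(entry)
        return True

    def pending(self) -> list[AdTestEntry]:
        """Tests that are planned but not yet deployed."""
        return [e for e in self.entries if not e.executed]


if __name__ == "__main__":
    log = AdTestLog()
    shipping = AdTestEntry(
        goal="Find the most valuable free shipping threshold",
        execution="Threshold values and free shipping messaging",
        technical_level="low",
        creative_level="medium",
    )
    print(log.add(shipping))  # True
    rerun = AdTestEntry(
        goal="find the most valuable free shipping threshold ",
        execution="threshold values and free shipping messaging",
        technical_level="low",
        creative_level="low",
    )
    print(log.add(rerun))  # False: same goal and execution as an existing row
    print(len(log.pending()))  # 1
```

Matching on goal plus execution is a deliberately simple rule; a real team might also compare audiences or date ranges before deciding two tests are "the same."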

Myth #2: A Large Majority Of Tests Kill It!

The sad reality is that most people spin their wheels when it comes to testing, and the deck is stacked against them from the get-go.

Based on my own research and experience, I would say that tests are more likely to fail than to succeed; that is, most have no meaningful impact at all, positive or negative. Furthermore, of the small minority of tests that do have a notable result, about half will negatively impact the bottom line!

To rub more salt in the wound, very few tests with a positive impact really “knock it out of the park.” The deck is stacked against testers who don’t know what they’re doing!
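The article doesn’t say how to decide whether a test had a “meaningful impact.” One common approach, sketched below as an assumption rather than the author’s method, is a two-proportion z-test on the conversion rates of the baseline (A) and the test version (B). The function names, the 5% significance level and the example numbers are all hypothetical, and the normal approximation assumes reasonably large sample sizes.

```python
import math


def two_proportion_z_test(conv_a: int, visits_a: int,
                          conv_b: int, visits_b: int) -> tuple[float, float]:
    """Compare conversion rate B (test) against A (baseline).

    Returns (z, two-sided p-value) using the pooled normal approximation.
    """
    rate_a = conv_a / visits_a
    rate_b = conv_b / visits_b
    pooled = (conv_a + conv_b) / (visits_a + visits_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    if se == 0:
        # 0% or 100% conversion in both versions: no evidence of a difference.
        return 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def classify_test(conv_a: int, visits_a: int, conv_b: int, visits_b: int,
                  alpha: float = 0.05) -> str:
    """Label a test as positive, negative, or no meaningful impact (hypothetical helper)."""
    z, p_value = two_proportion_z_test(conv_a, visits_a, conv_b, visits_b)
    if p_value >= alpha:
        return "no meaningful impact"
    return "positive" if z > 0 else "negative"


if __name__ == "__main__":
    # Hypothetical numbers: 10,000 visits per version, baseline converts at 2.0%.
    print(classify_test(200, 10_000, 210, 10_000))  # no meaningful impact
    print(classify_test(200, 10_000, 260, 10_000))  # positive
    print(classify_test(200, 10_000, 150, 10_000))  # negative
```

With 10,000 visits per version, a lift from 2.0% to 2.1% is indistinguishable from noise, which illustrates why so many tests land in the “no meaningful impact” bucket.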

Myth #3: Move Along From Loser Tests Fast!

Given that many tests don’t yield positive results, it’s important to iterate on them. You’ve probably learned something; it’s just a matter of uncovering what. Don’t throw the baby out with the bathwater by completely disregarding what the test taught you. Keep tweaking your tests and you’ll find a winner.

Here are some suggestions on how to find gems in tests that don’t move the needle:

- Run a focus group, and ask folks what they expect from a particular page, from your company, from your advertising. There’s huge insight in talking to folks. Not all info/insight is in the data that we collect.

- If you work with clients (as a consultant or on the agency side), it’s a good idea to get their ideas about products, as they know their goods and audience best. Then, work to put your marketing twist on them.

Myth #4: Boring Pages Don’t Convert!

Wrong! Boring pages actually convert better.

Never, never include any “flash whiz-bang” elements on your page. It sounds obvious, but you’d be very surprised how often this happens.

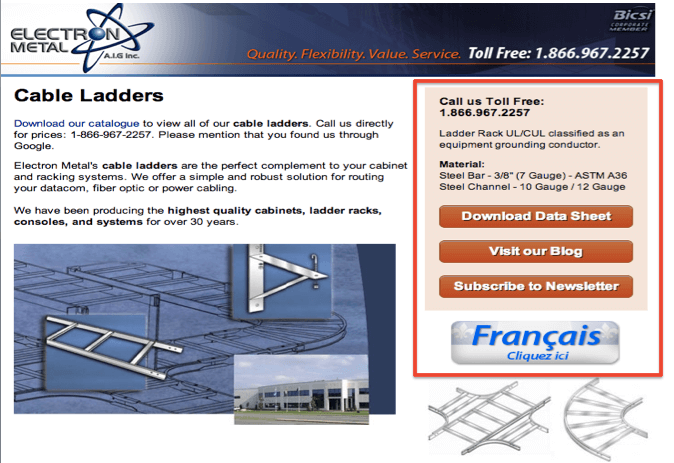

In the image above, I’ve highlighted some of the best elements to test on a page.

These are some of the page elements I really like to include (and test) on landing pages:

Headline. This should tie into the keyword terms you’re targeting in the account. In this case, it’s “soundproof windows.”

Company Info. Tell folks who you are and why they should buy from you. In this example, the text says “over 20,000 windows installed in 5000 homes and businesses.”

Image. This relates to the product or service that you’re selling.

Benefit Statements. These should be in bullet list form. People often don’t read pages, but rather scan them — so bullet points are more easily absorbed by your visitors.

CTA (in this case, a form). Use one call-to-action (CTA) per page. Don’t do this type of thing:

You’ll notice that the page above presents many options for visitors: download a data sheet, visit our blog and subscribe to our newsletter. There’s even the option to see the page in French, for gosh sakes!

Select a single conversion event and have the event relate a little more directly to the sale of your product. In this case, it would have been better if the advertiser had included a lead form on the page for interested folks to leave their contact details, and have a sales rep call them back.

Testimonials. It’s great to show that you’ve had satisfied customers and to reinforce your overall value propositions. In this example, the testimonials reinforce that the company’s custom window work (its value prop) is second to none.

Credibility Indicators. These provide “street cred.” In this case, the sources are NY Magazine and a very popular window-industry report.

Myth #5: But So-And-So Ran The Test And It Worked!

This is the biggest sin of them all, and I hear it all the time when I’m speaking at conferences. Run fast if you hear statements like these used to justify a test:

- I absolutely know this works as I've seen our major competitor do it!

- A credible blog and/or source wrote about it, so it must work!

- My friend who’s been in the industry for 5 years runs the test all the time!

- I’m the target market and I truly understand what people are looking for!

- My colleague tried it and it did super well!

- I went to Harvard and I know this is the right test to run!

Generally speaking, the above mentality leaves companies with a long list of testing ideas, but no idea if they’re focusing on the “right” tests. If they happen to have a “right” test, they don’t know it and are unable to prioritize it. To avoid this, use the pointers in this article.

The big takeaway is to keep a testing spreadsheet. This way, you’ll continue to move forward without leaving anything out, you won’t waste time rerunning old tests, and you won’t be as tempted to go down the perilous “so-and-so ran the test” path.

To view the original article Click Here
